Provocative tweet of the day:

https://twitter.com/jules2689/status/951123635510693889

There are two ways you can help users become proficient with your product. The first is explicit feedback, in which you literally tell them what to do. Documentation/manuals are a common example. The second is implicit feedback, where the user learns by doing. Implicit feedback is great, but a whole bunch of prerequisites have to be in place in order for it to work. This is why your toddler doesn’t need to study verb conjugation when they learn a new language, but you do. In the case of language acquisition, the story about prerequisites is complicated. Something about neurotransmitters? I have no idea. In the case of technology, the story is simpler: we can learn by doing if we’ve seen something similar before.

Users spend most of their time on other sites. This means that users prefer your site to work the same way as all the other sites they already know.

– Jakob’s Law

It’s both totally obvious and easy to forget that using computers requires a lot of tacit knowledge. When you see a search bar, checkbox, or dropdown menu, you immediately know what it is and how to use it. This is not because you’re some sort of genius — it’s because you have years of training.

The problem with most chatbots is that they don’t really do either kind of feedback very well. There isn’t a user manual (nor would anybody read it if there were), and there isn’t enough similarity between chatbots for proficiency with one to be a transferable skill.

“best ____ near me”

Gately and associate scanned for a wallsafe, which surprisingly like 90% of people with wallsafes conceal in their master bedroom behind some sort of land or seascape painting. People turned out so identical in certain root domestic particulars it made Gately feel strange sometimes, like he was in possession of certain overlarge private facts to which no man should be entitled. Gately had a way stickier conscience about the possession of some of these large particular facts than he did about making off with other people’s personal merchandise.

– Infinite Jest

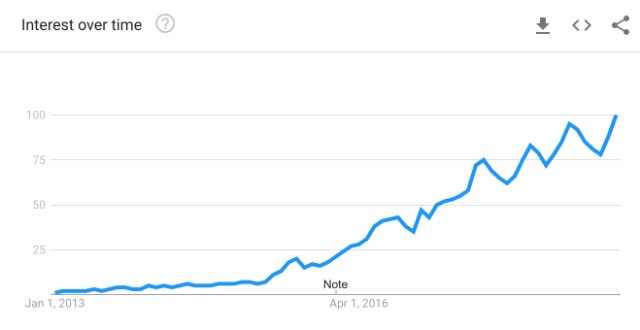

You may think that the way you use Google/Facebook/etc is idiosyncratic, but it’s almost certainly not. It just feels that way because you don’t get to watch anyone else using it. In reality we converge on patterns of behaviour without stating them outright. An oft-cited example is that at some point in 2015 we all began making Google searches of the form “best ____ near me.” Here’s the Google trend for “best sushi near me” over the period 2013-2019:

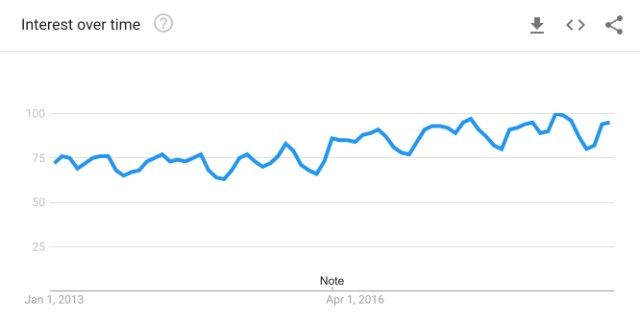

As a control, here’s the trend for “sushi” over the same time period:

The platform tends to play an active role in these developments. The growth in best-near-me searches coincides with Google embedding maps with locations pinned on the results page.

Tech companies are a little like David Foster Wallace’s B&E artist. They get to see the “large particular facts” through logging and user testing. In the biz we call it “aggregate data.” The industry has long been tweaking the standard UI elements to best accommodate our usage. Learned behaviours and UI improvements are symbiotic.

One consequence of all this is that many touchstone chatbot features are already being offered by less sexy UI elements with greater efficiency. Probably the two most critical ones are forms, in which you’re eliciting information from the user, and search, in which the user is eliciting information from you. Here is a stab at a translation guide between a search bar and a chatbot that more or less does the same thing:

| Chatbot | Search bar |
| --- | --- |
| “Can I help you with…” | “autocomplete” |
| “Did you mean…” | showing multiple options |
| “I didn’t understand…” | perform a different search |
| “Do you want type X, Y, Z….” | perform a slightly refined search |
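To make the search-bar column a bit more concrete, here is a rough Python sketch of three of those behaviours. Everything in it is made up for illustration: the `CATALOGUE` list and the `autocomplete`, `did_you_mean`, and `refine` helpers are hypothetical names, and the fuzzy matching leans on the standard library’s `difflib` rather than anything a real search engine would use.

```python
import difflib

# Hypothetical catalogue of searchable items (an assumption for this sketch).
CATALOGUE = ["sushi", "sushi bar", "sashimi", "ramen", "tacos"]


def autocomplete(prefix, items=CATALOGUE, limit=5):
    """Rough analogue of "Can I help you with...": offer completions as the user types."""
    p = prefix.strip().lower()
    if not p:
        return []
    return [item for item in items if item.startswith(p)][:limit]


def did_you_mean(query, items=CATALOGUE, limit=3):
    """Rough analogue of "Did you mean...": show a few close matches for a typo."""
    q = query.strip().lower()
    return difflib.get_close_matches(q, items, n=limit, cutoff=0.6)


def refine(results, keyword):
    """Rough analogue of "Do you want type X, Y, Z...": narrow an existing result list."""
    k = keyword.strip().lower()
    return [r for r in results if k in r]
```

Calling `autocomplete("su")` returns `["sushi", "sushi bar"]`, and `refine` then narrows that list the way a user would by typing one more word. None of this needs a conversation: the user drives each step with behaviour they already know.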

Users naturally leverage all these features, because they’ve been trained to on every site they use. When you introduce a new UI you’re not necessarily making it easier for the user. You might be making it harder, because now they have to figure out what is going on without being able to draw on their past experience.

I’m not dogmatically opposed to chatbots. I just think it’s important to be aware of any cognitive load your product imposes on the user. If you can show that the time spent learning is recouped in the long run, then as long as the learning curve doesn’t hurt adoption, go nuts. Some of Google’s best features (my example included!) necessitate learned behaviour on the part of the user. Most of us use Google daily, so it’s time well spent. But if the product is used once/infrequently, this is probably a poor use case for a UI that doesn’t exploit Jakob’s Law.