A recent press release from NYU celebrates the release of some new video games that “train your brain.” The games were developed by “developmental psychologists, neuroscience researchers, learning scientists, and game designers,” so you can be sure they’re thrilling. There are two issues I want to discuss. The first is their choice of control. The article is paywalled so I don’t know all the details of the experimental setup, but the abstract gives you a fairly good idea:

> When students played for 2 h over 4 sessions they developed significantly better performance on cognitive shifting tests compared to a control group that played a different game
>
> …
>
> Experiment 3 replicated the results of Experiment 1 using an inactive control group, showing that playing Alien Game for 2 h resulted in significant improvements in shifting skills

In short, the authors have succeeded in showing that their product is better than nothing and/or random video games (better for what, you ask—don’t worry, we’ll get to that soon). The problem here is that this isn’t a drug trial, where patients have no alternative. This is how you choose to spend your time. There is an opportunity cost incurred when you decide to spend hours of your life playing a video game designed by academics. You could have spent that time reading, exercising, studying, playing chess, or any number of other activities. Put differently, if someone said “you have a couple hours to improve your cognitive function, go” your first thought probably wouldn’t be “hmm let’s kill some pedestrians in Grand Theft Auto.”

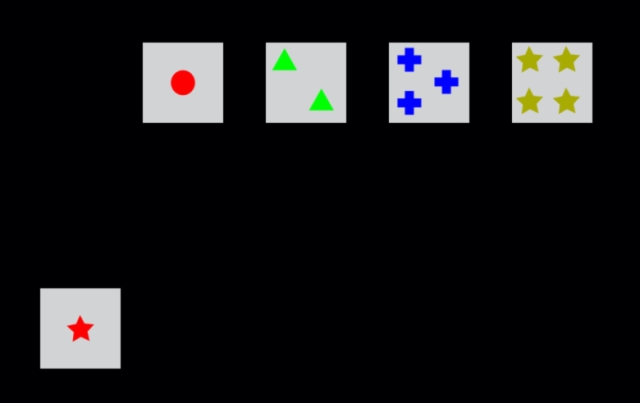

The other issue concerns one of my recent obsessions: construct validity. The press release talks about “cognitive skills.” By contrast, the journal article talks about “performance on cognitive shifting tests.” Are these the same thing? You be the judge; you can take a cognitive shifting test here. For those of you who don’t want to, here’s a screenshot:

The idea is that you click one of the four cards above that is similar to the card in the bottom left. Which similarity you are supposed to care about (colour, number, shape) depends on the test. Once you begin the task, you get feedback whenever you click the wrong card. This way, you can deduce whether you’re supposed to use colour, number or shape.
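To make the mechanics concrete, here is a minimal Python sketch of the task as described above. It is my own toy model, not the actual test software: the card values, the `STIMULI` list, and the strategy of "guess the first rule not yet ruled out" are all assumptions made for illustration.

```python
from dataclasses import dataclass

DIMENSIONS = ("colour", "number", "shape")


@dataclass(frozen=True)
class Card:
    colour: str
    number: int
    shape: str


# Four reference cards that differ on every dimension (values are made up).
REFERENCE_CARDS = (
    Card("red", 1, "triangle"),
    Card("green", 2, "star"),
    Card("yellow", 3, "cross"),
    Card("blue", 4, "circle"),
)

# Hypothetical stimulus cards. Each one matches a different reference card
# on each dimension, so every click is informative.
STIMULI = (
    Card("red", 2, "cross"),
    Card("green", 3, "circle"),
    Card("yellow", 4, "triangle"),
)


def matching_card(stimulus, rule):
    """Index of the reference card that matches the stimulus under `rule`."""
    for index, reference in enumerate(REFERENCE_CARDS):
        if getattr(reference, rule) == getattr(stimulus, rule):
            return index
    raise ValueError(f"no reference card matches {stimulus} on {rule}")


def deduce_rule(hidden_rule, stimuli):
    """Click cards and use right/wrong feedback to eliminate candidate rules.

    Returns (deduced_rule, trials_used), or (None, trials_used) if the
    stimuli run out before a single candidate remains.
    """
    candidates = list(DIMENSIONS)
    trials_used = 0
    for stimulus in stimuli:
        trials_used += 1
        # Assumed strategy: act on the first rule not yet ruled out.
        clicked = matching_card(stimulus, candidates[0])
        was_right = clicked == matching_card(stimulus, hidden_rule)
        # Keep only the rules that agree with the feedback just received.
        candidates = [
            rule
            for rule in candidates
            if (matching_card(stimulus, rule) == clicked) == was_right
        ]
        if len(candidates) == 1:
            return candidates[0], trials_used
    return None, trials_used


for rule in DIMENSIONS:
    print(rule, deduce_rule(rule, STIMULI))
```

With three possible rules and feedback on every click, this toy player pins down the rule within two trials. That is roughly the point: the skill being measured is narrow and very learnable.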

It would be pretty easy to build a video game that is similar to this. As someone gets better at your video game, of course they are going to get better at this task. It would be astonishing if they didn’t. But it’s a flatly circular exercise. We’re not interested in the test for its own sake; we’re interested in it as a barometer for more general cognitive skills. Once we start optimizing for test scores, the test loses its value as a signal for higher-level traits. The authors have essentially shown that people who practice sorting cards get better at sorting cards than people who don’t practice. I could have told you that.

My aim here isn’t to go after the authors; in the first place they wouldn’t care, and in the second place they aren’t doing anything genuinely nefarious. I wrote this post because there is a good general lesson about control groups here. I should add that I’m strictly talking about software products. I don’t know anything about epidemiology, and there might be good reasons why this argument doesn’t hold in that domain.

The lesson is that your control shouldn’t, by default, be “nothing,” nor should it be “something superficially similar to the treatment.” It should be the opportunity cost of treatment. If nothing good is available for the same price, then “nothing” is a fine control. But if something good is available and you neglect to compare against it, you’re not proving anything.
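The rule above can be written down as a small selection function. This is only a sketch of the reasoning, in Python: the `Activity` type, the `choose_control` name, and above all the benefit numbers are placeholders I made up, not measurements of anything.

```python
from typing import NamedTuple, Optional, Sequence


class Activity(NamedTuple):
    name: str
    hours: float             # cost of the activity in time
    expected_benefit: float  # placeholder score, NOT a measured effect


def choose_control(
    alternatives: Sequence[Activity], budget_hours: float
) -> Optional[Activity]:
    """Pick the control: the best alternative that fits in the same time budget.

    Returns None, meaning an inactive "nothing" control is acceptable, when
    no beneficial alternative is available at that price.
    """
    affordable = [
        activity
        for activity in alternatives
        if activity.hours <= budget_hours and activity.expected_benefit > 0
    ]
    if not affordable:
        return None
    return max(affordable, key=lambda activity: activity.expected_benefit)


# Entirely hypothetical numbers, chosen only to exercise the function.
ALTERNATIVES = [
    Activity("reading", hours=2.0, expected_benefit=0.3),
    Activity("exercising", hours=2.0, expected_benefit=0.5),
    Activity("playing chess", hours=10.0, expected_benefit=0.9),
]

control = choose_control(ALTERNATIVES, budget_hours=2.0)
print(control.name if control else "nothing")
```

The treatment then has to beat whatever `choose_control` returns, not an arbitrary comparison chosen by the experimenter.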